In this latest blog post, my growing love affair with FME and AI continues as I look at how combining the two can banish everyday drudgery.

Having recently gone to a restaurant in Auckland only to find the road it was located on was closed, I wondered (whilst enjoying a spicy Chana Masala) how I might automate the process by which restaurant owners could be notified of road closures outside their restaurant.

My wife, finding the subject somewhat less engaging, suggested I consider the problem at a later date. She had a point.

The later date arrived and I started to think about the problem. I’d need to know in advance when roads were due to be closed, and fortunately Auckland Transport provides an ArcGIS REST endpoint which includes the road segment geometry, the date and time of the closure, and the reason for it.
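
For anyone who wants to see what a request to such an endpoint looks like outside FME, here is a minimal Python sketch that builds a standard ArcGIS REST feature-layer query. The layer URL is a placeholder, not the real Auckland Transport address, and asking for GeoJSON assumes the service supports that output format.

```python
from urllib.parse import urlencode

# Hypothetical layer URL: a placeholder, not the real Auckland Transport endpoint.
LAYER_URL = "https://example.com/arcgis/rest/services/RoadClosures/FeatureServer/0"


def build_query_url(layer_url: str, where: str = "1=1") -> str:
    """Build a feature-layer query URL that asks for every field as GeoJSON."""
    params = {
        "where": where,    # attribute filter; 1=1 matches every feature
        "outFields": "*",  # return all attribute fields (dates, reason, ...)
        "outSR": 4326,     # WGS84 lat/long, to match the POI coordinates
        "f": "geojson",    # response format (assumes the service offers it)
    }
    return f"{layer_url}/query?{urlencode(params)}"


url = build_query_url(LAYER_URL)
print(url)
```

Requesting the geometry in WGS84 keeps the closures in the same coordinate system as the latitude and longitude values in the POI file.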

I’d also need a dataset of restaurants in Auckland, which was surprisingly difficult to find. I had to settle for a free CSV Point of Interest (POI) dataset from OpenStreetMap, which defined different point types but only provided latitude and longitude coordinates.

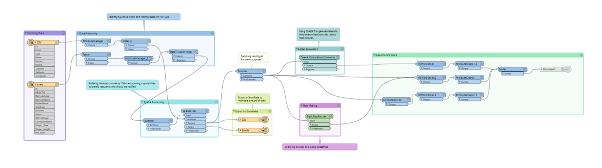

With the necessary datasets in hand, the next step was to design a workflow that would effectively integrate these diverse sources.

Firstly, I imported both datasets and ran a bit of data processing to ensure that the data was consistent. In particular, the road closure dataset had multiple date formats that needed to be cleansed using the DateTimeConverter. I also ran a Tester to filter the CSV data and pull out restaurants, pubs, fast food outlets and food courts.
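
The same two clean-up steps can be sketched in plain Python. This is not what the DateTimeConverter or Tester do internally, just the equivalent logic. The three input formats and the `type` column name are assumptions, since the actual formats and schema aren't listed here; the four category names are the standard OpenStreetMap amenity values.

```python
from datetime import datetime

# Assumed input formats; the real closure data may use others.
KNOWN_FORMATS = ("%Y-%m-%d %H:%M", "%d/%m/%Y %H:%M", "%Y-%m-%dT%H:%M:%S")

# OpenStreetMap amenity values for the four categories kept.
FOOD_TYPES = {"restaurant", "pub", "fast_food", "food_court"}


def normalise_datetime(raw: str) -> str:
    """Try each known format in turn and return an ISO 8601 string."""
    for fmt in KNOWN_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).isoformat()
        except ValueError:
            continue  # not this format, try the next one
    raise ValueError(f"Unrecognised date format: {raw!r}")


def is_food_venue(row: dict) -> bool:
    """Keep only rows whose type (assumed column name) is food-related."""
    return row.get("type", "").strip().lower() in FOOD_TYPES


print(normalise_datetime("03/07/2023 09:30"))  # 2023-07-03T09:30:00
print(is_food_venue({"type": "pub"}))          # True
```

Raising an error on an unknown format, rather than guessing, makes it obvious when the source starts publishing dates in a new style.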

Having cleaned the data, I then needed to select only those restaurants that were impacted by a road closure. To do so, I used the Bufferer to create a buffer of 100 metres around the closed road segments and then a SpatialFilter to select the restaurants that fell inside the buffer.
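
A point lies inside a 100 metre buffer of a line exactly when its shortest distance to that line is 100 metres or less, so the buffer-and-filter step can be approximated with a distance test. The sketch below is a stand-in for the idea, not a description of how the Bufferer or SpatialFilter work. It uses a simple equirectangular projection, which is only reasonable over short distances like these, and all function names are made up for illustration.

```python
import math

EARTH_RADIUS_M = 6_371_000


def to_local_xy(lat, lon, ref_lat):
    """Project lat/long to approximate metres (equirectangular)."""
    x = math.radians(lon) * EARTH_RADIUS_M * math.cos(math.radians(ref_lat))
    y = math.radians(lat) * EARTH_RADIUS_M
    return x, y


def distance_to_segment_m(point, seg_start, seg_end):
    """Shortest distance in metres from a (lat, lon) point to one segment."""
    ref_lat = point[0]
    px, py = to_local_xy(*point, ref_lat)
    ax, ay = to_local_xy(*seg_start, ref_lat)
    bx, by = to_local_xy(*seg_end, ref_lat)
    dx, dy = bx - ax, by - ay
    length_sq = dx * dx + dy * dy
    if length_sq == 0:
        t = 0.0  # degenerate segment: both ends are the same point
    else:
        # Fraction along the segment of the closest point, clamped to its ends.
        t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / length_sq))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def within_buffer(point, road, buffer_m=100.0):
    """True if the point is within buffer_m of any segment of the polyline."""
    return any(
        distance_to_segment_m(point, a, b) <= buffer_m
        for a, b in zip(road, road[1:])
    )
```

Here `road` is a list of `(lat, lon)` vertices and `point` is a single `(lat, lon)` pair, so a restaurant is kept when `within_buffer(restaurant, road)` is true for at least one closed road.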

As mentioned, the POI dataset didn’t include the address of each restaurant, which I would also need, so I ran the Geocoder in reverse mode using the OpenCage API to find the address based on location.
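
Outside FME, a reverse geocoding call to OpenCage might be sketched roughly as follows. The URL and the `results`/`formatted` response fields follow my reading of the public OpenCage API, so check the current documentation before relying on them. The helper names are hypothetical, and the code only builds the request and reads a response; it makes no network call.

```python
from urllib.parse import urlencode

OPENCAGE_URL = "https://api.opencagedata.com/geocode/v1/json"


def build_reverse_geocode_url(lat: float, lon: float, api_key: str) -> str:
    """Reverse geocoding: the query is the coordinate pair as 'lat,lon'."""
    params = {"q": f"{lat},{lon}", "key": api_key}
    return f"{OPENCAGE_URL}?{urlencode(params)}"


def first_address(response: dict):
    """Return the formatted address of the best match, or None if there is none."""
    results = response.get("results", [])
    return results[0].get("formatted") if results else None


# YOUR_KEY is a placeholder for a real OpenCage API key.
print(build_reverse_geocode_url(-36.8485, 174.7633, "YOUR_KEY"))
```

Returning `None` when there are no results lets the caller decide whether to skip that restaurant or flag it for a manual look-up.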

So far so good.

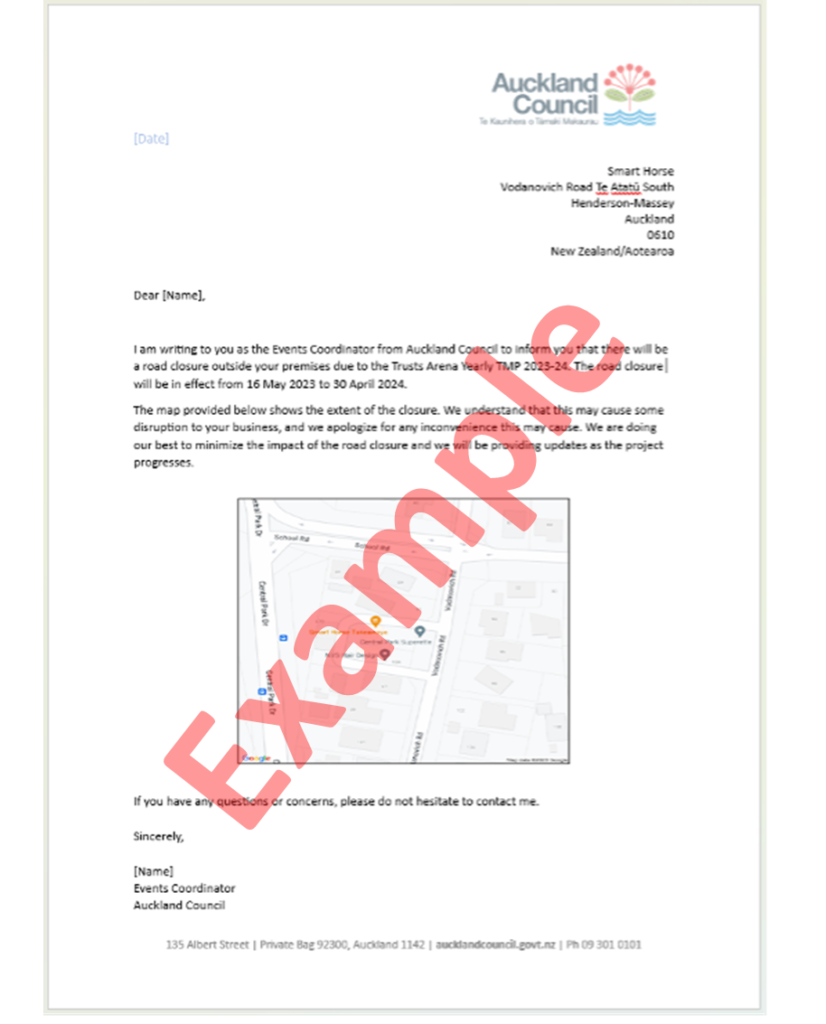

I then wanted to use the OpenAI ChatGPT transformer to write letters addressed to the restaurant owners, notifying them of the road closure and the reason for it. As a Large Language Model, ChatGPT does this very well, with human-like sentence structures. Additionally, because I fed in the start and end dates of the road closures, ChatGPT was able to structure the letter to include these details as well.
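
Most of the work in this step is assembling the closure attributes into a prompt. The sketch below shows one way that could look in Python. The wording and field names are illustrative assumptions, not the prompt actually used in the workspace, and the call to the model itself is left out.

```python
def build_letter_prompt(restaurant: str, address: str, road: str,
                        reason: str, start: str, end: str) -> str:
    """Assemble the closure details into a single instruction for the model."""
    return (
        "Write a short, polite letter to the owner of the restaurant below, "
        "notifying them of an upcoming road closure near their premises. "
        "State the reason and the start and end of the closure.\n"
        f"Restaurant: {restaurant}\n"
        f"Address: {address}\n"
        f"Road closed: {road}\n"
        f"Reason: {reason}\n"
        f"Closure starts: {start}\n"
        f"Closure ends: {end}\n"
    )


# Made-up values, purely to show the shape of the prompt.
prompt = build_letter_prompt(
    "Example Cafe", "1 Example Street, Auckland", "Example Street",
    "resurfacing", "2023-07-03T09:30:00", "2023-07-05T17:00:00",
)
print(prompt)
```

Passing the dates as separate labelled lines, rather than burying them in a sentence, gives the model less room to drop or misquote them.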

The letter however lacked some visual context. It was in fact missing a map of the road closure location, so I used the StaticMapFetcher to create a map of each road closure which would then be embedded into the letter.

Next, I used the MSWordStyler to create a Word template, pretending that I was from Auckland Council (which clearly I am not). I then embedded the various pieces of text that ChatGPT had created and the maps from the StaticMapFetcher, and boom, I could automatically create multiple Word documents ready to send to the respective restaurateurs and café owners whenever they were affected by a road closure. Job done… or is it?

It would be annoying to have to run the process manually. I mean, what’s the point of automation if some of it has to be manual? So I wondered if I could use FME Flow to automatically run the letter generator every time there was a new road closure. It turns out I could, or at least I could if the Auckland Transport Feature Service was configured with a webhook that FME Flow could listen for.

That configuration isn’t in place at the moment, but had it been set up, the process could be fully automated, with Word documents emailed to me whenever a road was due to be closed. All I would have to do is review them and send them.

So FME assigns another bit of drudgery to the done pile.

It can sometimes be hard to find suitable images for blog posts, but rather than worry about it I thought I would use Leap.AI to create some images for me. I asked for an 8K picture of café customers being disrupted by construction work. The AI didn’t quite get it right, but I love the output nevertheless.